Each record consists of one or more fields, separated by commas. The use of the comma as a field separator is the source of the name for this file format. A CSV file typically stores tabular data in plain text, in which case each line will have the same number of fields.

Instead, you should use python's built-in csv module. I'm fine with specifying the split as @lhoestq suggested. My only concern is when I'm loading from python dict or pandas, the library returns a dataset instead of a dictionary of datasets when no split is specified.

I know that they use a different function Dataset.from_dict or Dataset.from_pandas but the text/csv files use load_dataset(). However, to the user, they do the same task and we probably expect them to have the same behavior. A comma-separated values file is a plaintext file with a .csv extension that holds tabular data.

This is one of the most popular file formats for storing large amounts of data. Each row of the CSV file represents a single table row. The values in the same row are by default separated with commas, but you could change the separator to a semicolon, tab, space, or some other character. '#' is defined as a comment character so 'row ' is ignored in all rows.

As the first row as names parameter is set to true, att1, att2, att3 and att4 are set as Attribute names. The Attribute att1 is set as real , att2 as polynominal, att3 as date and att4 as real. For Attribute att4, the '-' character is ignored in all rows because the grouped digits parameter is set to true and '-' is specified as the grouping character. In row 2, the white spaces at the start and end of values are ignored because trim lines parameter is set to true. In row 3, quotes are not ignored because use quotes is set to true, the content inside the quotes is taken as the value for Attribute att2. In row 4, (\"no\") is taken as a in quotes, cause the escape character is set to '\'.

In row 5, the date value is automatically corrected from 'JAN.32' to 'Feb.1'. In row 6, an invalid real value for the Attribute att4 is replaced by '? ' because the read not matching values as missings parameter is set to true. In row 7, quotes are used to retrieve special characters as values including the column separator and a question mark. CSV is a file of comma-separated values, often viewed in Excel or some other spreadsheet tool.

There can be other types of values as the delimiter, but the most standard is the comma. Many systems and processes today already convert their data into CSV format for file outputs to other systems, human-friendly reports, and other needs. It is a standard file format that humans and systems are already familiar with using and handling. In this article, we will learn how to create multiple CSV files from existing CSV file using Pandas. When we enter our code into production, we will need to deal with editing our data files. You can use split or custom split options in Tableau to separate the values based on a separator or a repeated pattern of values present in each row of the field.

In this example, the common separator is a space character . Pandas is a powerful and flexible Python package that allows you to work with labeled and time series data. It also provides statistics methods, enables plotting, and more. One crucial feature of Pandas is its ability to write and read Excel, CSV, and many other types of files. Functions like the Pandas read_csv() method enable you to work with files effectively.

You can use them to save the data and labels from Pandas objects to a file and load them later as Pandas Series or DataFrame instances. Data in the form of tables is also called CSV – literally "comma-separated values." This is a text format intended for the presentation of tabular data. The values of individual columns are separated by a separator symbol – a comma , a semicolon (;) or another symbol.

The first loop converts each line of the file in a sequence of strings. The second loop converts each string to the appropriate data type. This mechanism is slower than a single loop, but gives more flexibility. In particular,genfromtxt is able to take missing data into account, when other faster and simpler functions like loadtxt cannot. By default, the read_csv() method uses the first row of the CSV file as the column headers. Sometimes, these headers might have odd names, and you might want to use your own headers.

An object data type in pandas.Series doesn't always carry enough information for Arrow to automatically infer a data type. For example, if a DataFrame is of length 0 or the Series only contains None/nan objects, the type is set to null. Avoid potential errors by constructing an explicit schema with datasets.Features using the from_dict or from_pandas methods.

See the troubleshoot for more details on how to explicitly specify your own features. This will parse the CSV file without headers and create a data frame. You can also add headers to column names by adding columns attribute to the read_csv() method. Most people take csv files as a synonym for delimter-separated values files. They leave the fact out of account that csv is an acronym for "comma separated values", which is not the case in many situations. Pandas also uses "csv" and contexts, in which "dsv" would be more appropriate.

Alternative delimiter-separated files are often given a ".csv" extension despite the use of a non-comma field separator. This loose terminology can cause problems in data exchange. Many applications that accept CSV files have options to select the delimiter character and the quotation character. The dictionary dtypes specifies the desired data types for each column.

It's passed to the Pandas read_csv() function as the argument that corresponds to the parameter dtype. Python comes with a module to parse csv files, the csv module. You can use this module to read and write data, without having to do string operations and the like. The goal of readr is to provide a fast and friendly way to read rectangular data from delimited files, such as comma-separated values and tab-separated values . It is designed to parse many types of data found in the wild, while providing an informative problem report when parsing leads to unexpected results.

If you are new to readr, the best place to start is the data import chapter in R for Data Science. A CSV file is a simple type of plain text file which uses a specific structure to arrange tabular data. The value of this argument is typically a dictionary with column indices or column names as keys and a conversion functions as values. These conversion functions can either be actual functions or lambda functions.

In any case, they should accept only a string as input and output only a single element of the wanted type. The article shows how to read and write CSV files using Python's Pandas library. To read a CSV file, the read_csv() method of the Pandas library is used. You can also pass custom header names while reading CSV files via the names attribute of the read_csv() method.

Finally, to write a CSV file using Pandas, you first have to create a Pandas DataFrame object and then call to_csv method on the DataFrame. The format of individual columns and rows will impact analysis performed on a dataset read into Python. For example, you can't perform mathematical calculations on a string . This might seem obvious, however sometimes numeric values are read into Python as strings.

In this situation, when you then try to perform calculations on the string-formatted numeric data, you get an error. The default pandas data types are not the most memory efficient. This is especially true for text data columns with relatively few unique values (commonly referred to as "low-cardinality" data). By using more efficient data types, you can store larger datasets in memory.

First, let's create a simple CSV file and use it for all examples below in the article. Create dataset using dataframe method of pandas and then save it to "Customers.csv" file or we can load existing dataset with the Pandas read_csv() function. Instead of reading the whole CSV at once, chunks of CSV are read into memory.

The size of a chunk is specified using chunksize parameter which refers to the number of lines. This function returns an iterator to iterate through these chunks and then wishfully processes them. Since only a part of a large file is read at once, low memory is enough to fit the data. Later, these chunks can be concatenated in a single dataframe. Delimiter-separated values are defined and stored two-dimensional arrays of data by separating the values in each row with delimiter characters defined for this purpose. This way of implementing data is often used in combination of spreadsheet programs, which can read in and write out data as DSV.

They are also used as a general data exchange format. CSV is a delimited data format that has fields/columns separated by the comma character and records/rows terminated by newlines. The format can be processed by most programs that claim to read CSV files.

You've used the Pandas read_csv() and .to_csv() methods to read and write CSV files. You also used similar methods to read and write Excel, JSON, HTML, SQL, and pickle files. These functions are very convenient and widely used. They allow you to save or load your data in a single function or method call. CSV File Import works almost exactly like the File widget, with the added options for importing different types of .csv files. In this workflow, the widget read the data from the file and sends it to the Data Table for inspection.

The CSV File Import widget reads comma-separated files and sends the dataset to its output channel. File separators can be commas, semicolons, spaces, tabs or manually-defined delimiters. The history of most recently opened files is maintained in the widget. Typically, the first row in a CSV file contains the names of the columns for the data. Let's see how to Convert Text File to CSV using Python Pandas.

Infile.readlines() will read all of the lines into a list, where each line of the file is an item in the list. This is extremely useful, because once we have read the file in this way, we can loop through each line of the file and process it. The file "countries_population.csv" is a csv file, containing the population numbers of all countries . The delimiter of the file is a space and commas are used to separate groups of thousands in the numbers.

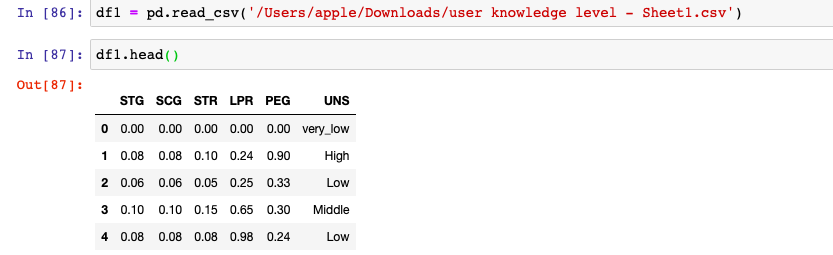

The method 'head' of a DataFrame can be used to give out only the first n rows or lines. You can useismissing(),isna(), andisnan()functions for the information about missing data. The first function returns true where there are missing values , missing strings, or None.

The second function returns a boolean expression indicating if the values are Not Available . The third function returns an array where there are NaN values. Vaex is a python library that is an out-of-core dataframe, which can handle up to 1 billion rows per second. It uses memory mapping, a zero-copy policy which means that it will not touch or make a copy of the dataset unless explicitly asked to. This makes it possible to work with datasets that are equal to the size of your hard drive. Vaex also uses lazy computations for the best performance and no memory wastage.

In this case the user expects to get only one dataset object instead of the dictionary of datasets since only one csv file was specified without any split specifications. If this parameter is set to true, values that do not match with the expected value type are considered as missing values and are replaced by '? For example, if 'abc' is written in an integer column, it will be treated as a missing value. A question mark (?) in the CSV file is also read as a missing value.

The Read CSV Operator automatically tries to determine an appropriate data type of the Attributes by reading the first few lines and checking the occurring values. Integer values are assigned the integer data type, real values the real data type. Values which cannot be interpreted as numbers are assigned the nominal data type, as long as they do not match the format of the date format parameter. This parameter indicates if quotes should be regarded.

Quotes can be used to store special characters like column separators. For example if is set as column separator and (") is set as quotes character, then a row will be translated as 4 values for 4 columns. On the other hand ("a,b,c,d") will be translated as a single column value a,b,c,d. If this parameter is set to false, the quotes character parameter and the escape character parameter cannot be defined. All values corresponding to an Example are stored as one line in the CSV file. Values for different Attributes are separated by a separator character.

Each row in the file uses the constant separator for separating Attribute values. The term 'CSV' suggests that the Attribute values would be separated by commas, but other separators can also be used. Like you did with databases, it can be convenient first to specify the data types. Then, you create a file data.pickle to contain your data. You could also pass an integer value to the optional parameter protocol, which specifies the protocol of the pickler. You also have some missing values in your DataFrame object.

No comments:

Post a Comment

Note: Only a member of this blog may post a comment.